Hathora launches Models to power voice AI beyond gaming

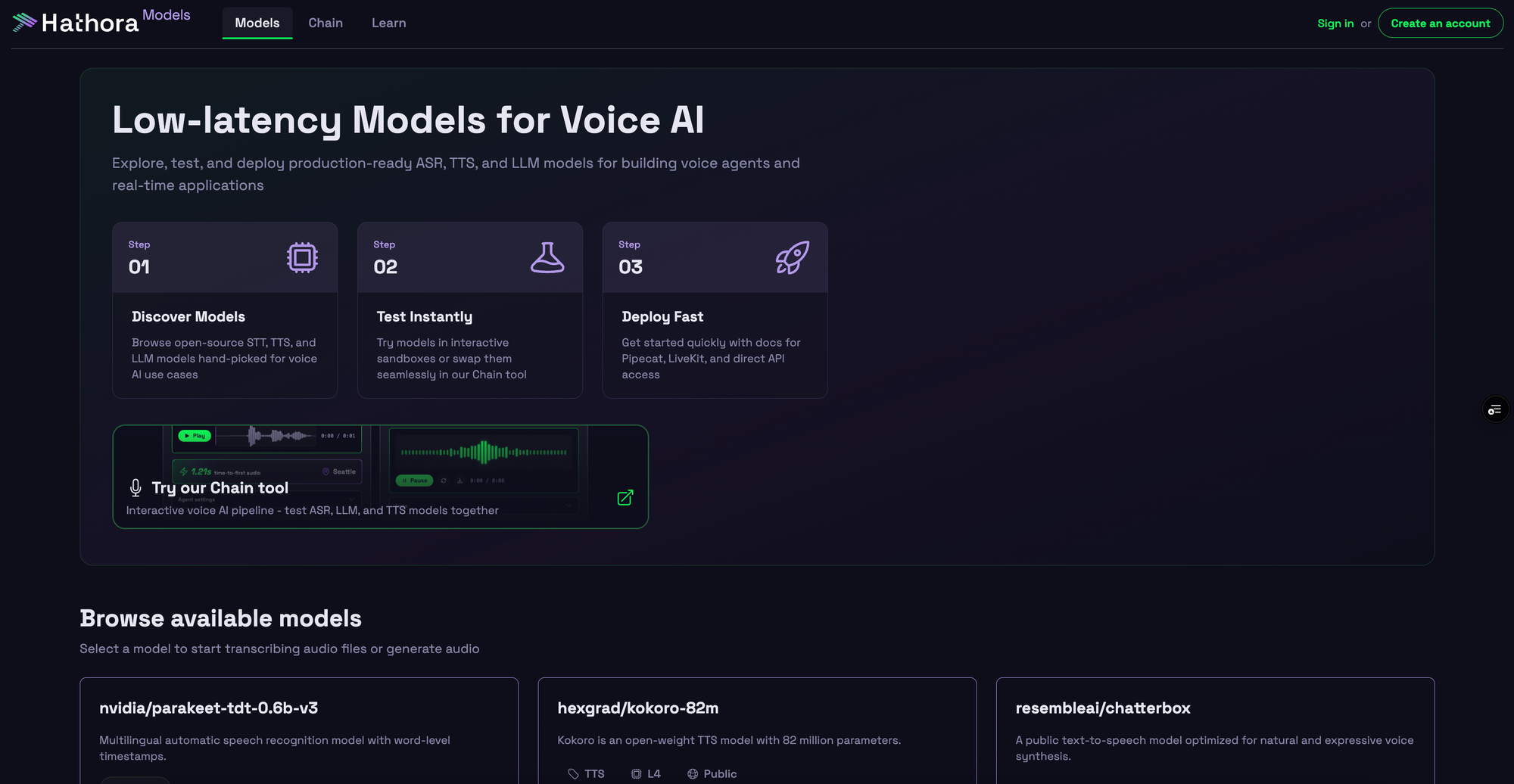

Today is an exciting day in Hathora's journey. I’m thrilled to announce the launch of models.hathora.dev, an inference platform to explore, test, and deploy production-ready ASR, TTS, and LLM models for voice agents. You can try it today and support our launch on Product Hunt.

For years, we've been building the infrastructure backbone for multiplayer gaming, powering everything from indie passion projects to breakout hits. We’re now taking that proven infrastructure platform and expanding it to support the next frontier of interactive experiences: conversational AI for the global market.

Why Voice, and Why Open Source?

We believe voice is the next paradigm shift in how humans interact with AI. Text-based chat, like ChatGPT, unlocked a wave of AI capabilities, but we see the next big unlock for enterprise applications coming from being able to speak to AI directly. Voice interfaces will eliminate language barriers and make interactions more intuitive, unlocking broad enterprise adoption.

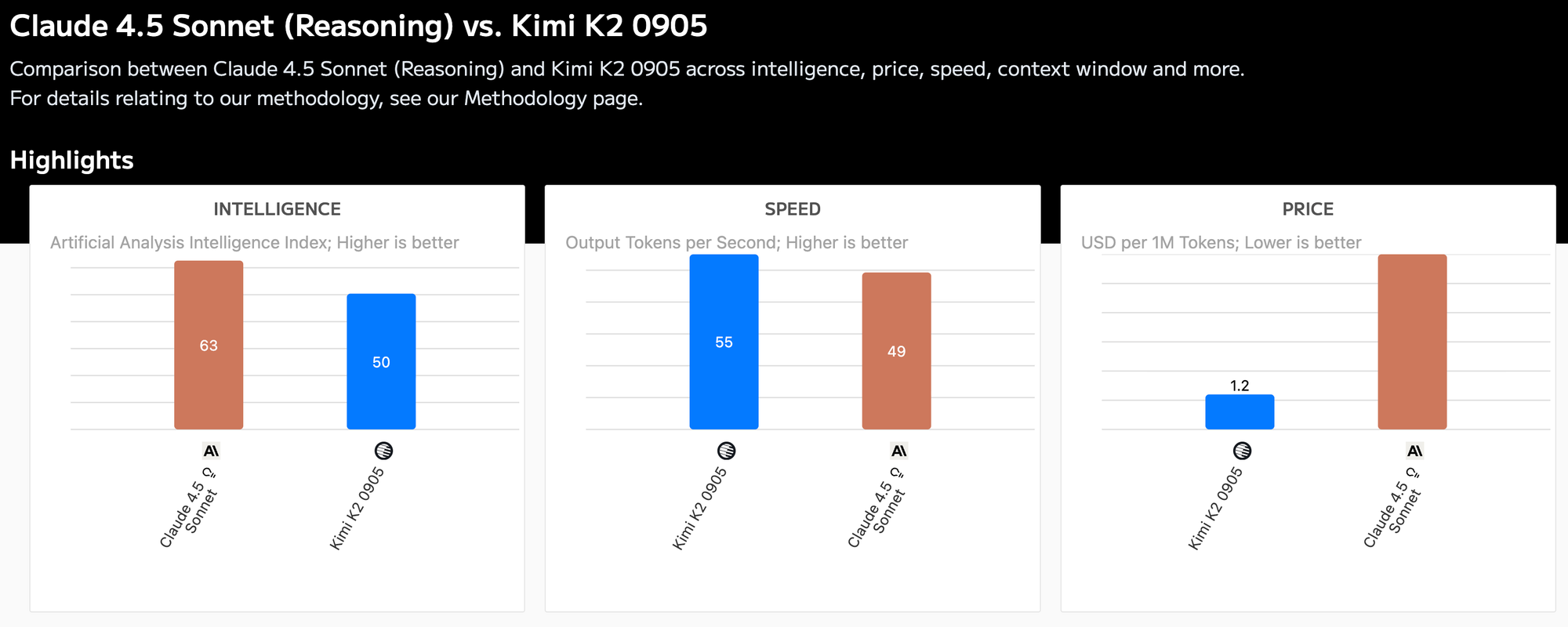

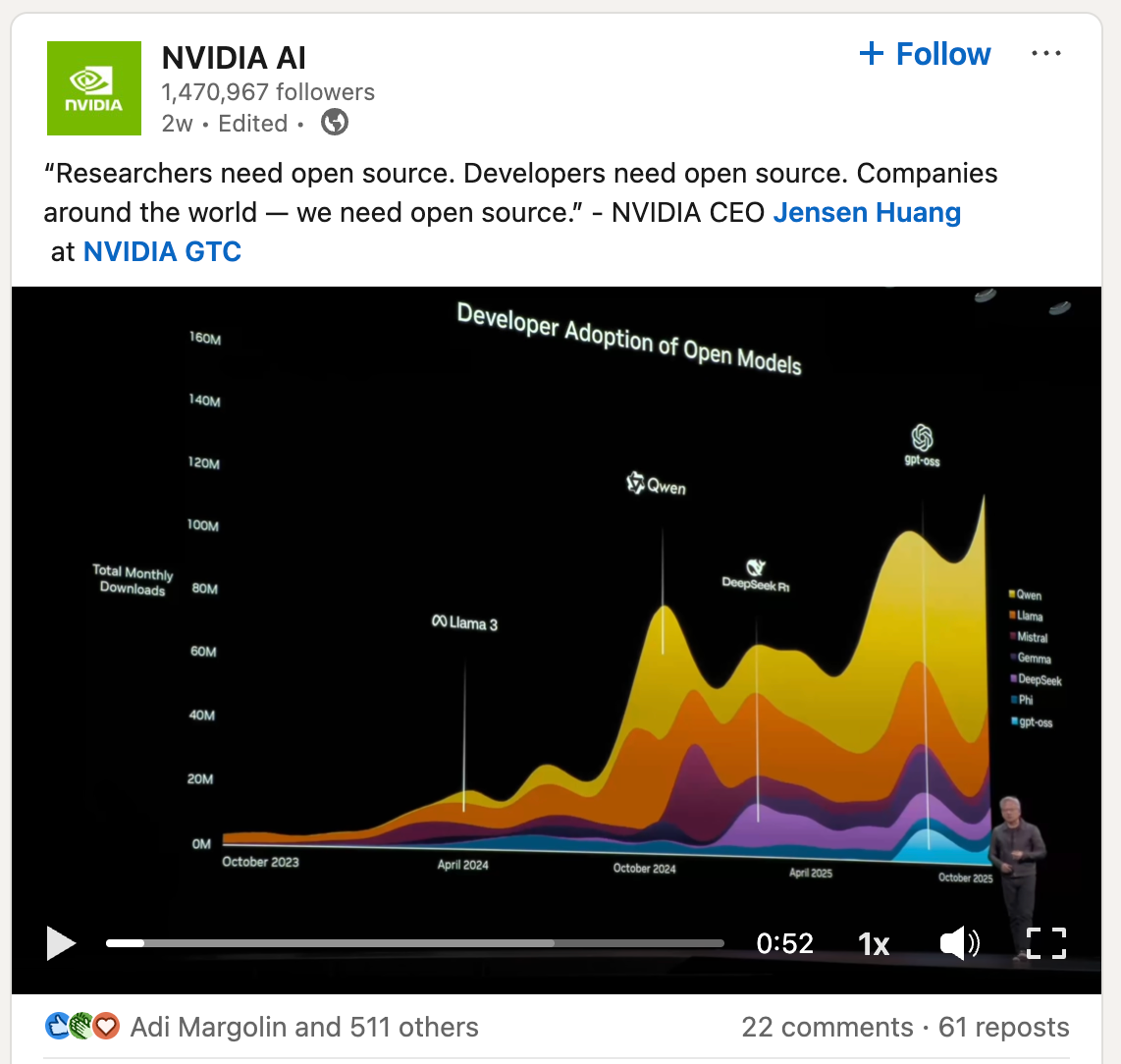

Our money is on open-source models to power this shift. We’ve already seen it in the LLM world: open models are catching up to and even outperforming closed ones. For example, Moonshot AI’s Kimi K2 (a trillion-parameter open model) has delivered performance nearly comparable to Anthropic’s Claude Sonnet 4.5 on challenging reasoning and coding benchmarks, at a fraction of the cost.

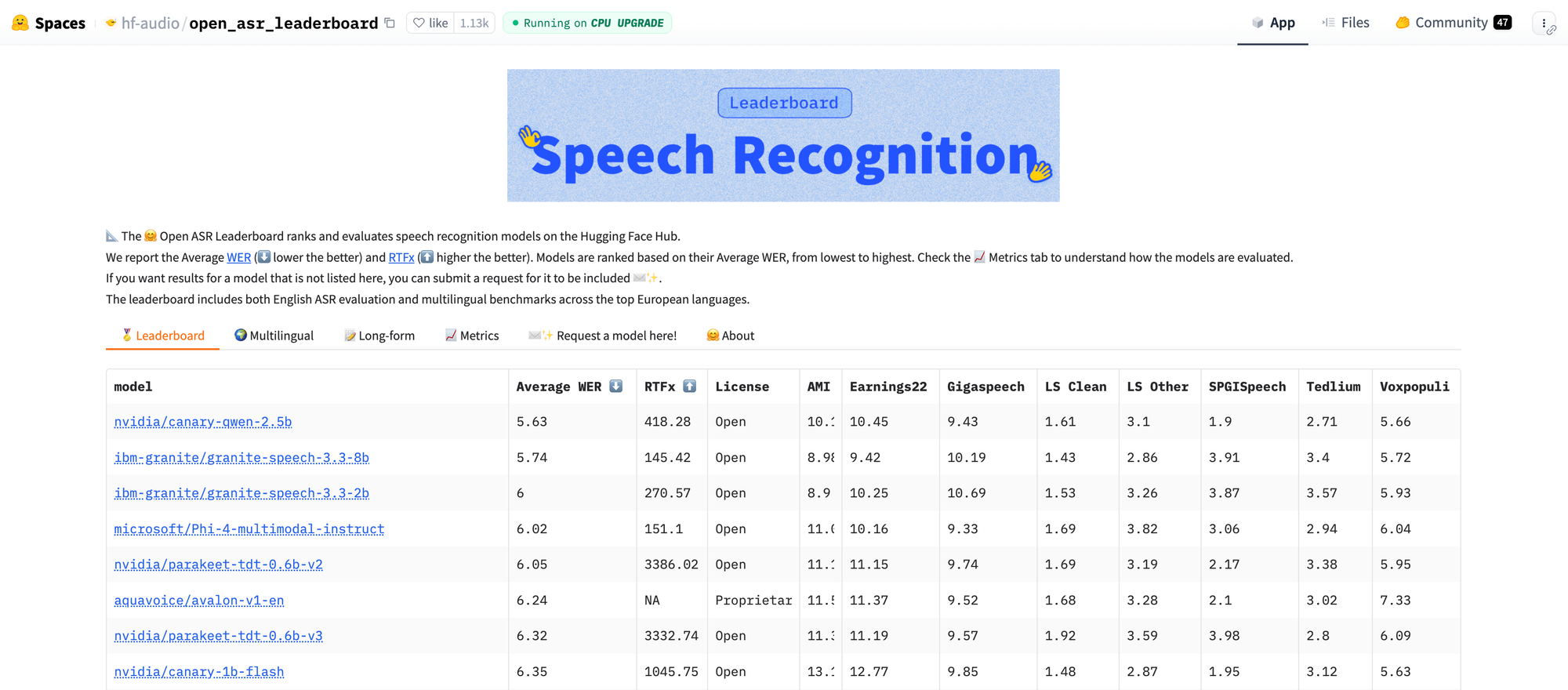

In speech recognition, open ASR models top the leaderboards, with multiple NVIDIA and IBM models ranked in the top five. The highest-ranked proprietary service sits in sixth place.

Major players in the space have been saying that open source is the future. For example, NVIDIA’s CEO Jensen Huang has praised open-source AI for “fueling the adoption of AI in enterprises” by allowing companies to build without being gatekept by closed API providers.

ElevenLabs’ own co-founder, Mati Staniszewski, predicts that voice AI models will "commoditize" in the next few years, as differences between proprietary and open models shrink.

We agree: innovation compounds faster when more developers can experiment without permission.

What We’ve Built

We’ve built a model marketplace that lets you explore, test, and deploy ASR, TTS, and LLM models for your voice agents without touching infrastructure.

Key features include:

- Automatic geo-routing: Requests hit the nearest region for minimal round-trip time.

- Elastic autoscaling: Scale from zero to peak traffic automatically.

- Pay-as-you-go: Pay only for tokens or audio processed, never for idle GPUs.

- Dedicated endpoints: Deploy to 14 global regions, close to users.

The platform abstracts away the hard parts: hardware provisioning, GPU scheduling, autoscaling, metering, and routing.
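To make the geo-routing idea concrete, here is a minimal sketch of choosing the closest region for a client. It is an illustration only, not how models.hathora.dev is implemented: the region names, their coordinates, and the `nearest_region` helper are all hypothetical, and it uses great-circle distance as a rough stand-in for round-trip time, where a production router would more likely rely on measured latency.

```python
import math

# Hypothetical region names and coordinates, for illustration only.
# These are NOT Hathora's actual regions.
REGIONS = {
    "us-east": (38.9, -77.0),
    "eu-west": (53.3, -6.3),
    "ap-southeast": (1.35, 103.8),
}

EARTH_RADIUS_KM = 6371.0


def haversine_km(a, b):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (
        math.sin((lat2 - lat1) / 2) ** 2
        + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2
    )
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_region(client, regions=REGIONS):
    """Return the name of the region geographically closest to the client."""
    return min(regions, key=lambda name: haversine_km(client, regions[name]))


if __name__ == "__main__":
    # A client near Paris is routed to the hypothetical "eu-west" region.
    print(nearest_region((48.85, 2.35)))
```

Distance is only a proxy here. The point of the sketch is that the routing decision happens per request, so the caller never has to pick a region.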

Why This Matters

Most AI teams hit the same roadblocks: infrastructure that buckles under load, pricing that limits iteration, and time lost managing GPUs instead of improving products.

With models.hathora.dev, deploying AI globally becomes:

- Instant: Launch or expand to new regions in seconds.

- Affordable: Scale cost-effectively with usage-based pricing.

- Invisible: Infrastructure that fades into the background and just works.

Our goal is simple: remove the friction between your ideas and production-ready AI. We can’t wait to see what you build.