Stateful Routing: When Load Balancers Don’t Work

When it comes to scalable backend architecture, the load balancer has long reigned supreme. Best practice says your backend services should be stateless, so that you can run multiple replicas behind a load balancer and get horizontal scalability and high availability.

For 99% of cases, this is the correct approach – but every rule has an exception. For apps that require long-lived connections or in-memory state (e.g. collaborative apps like Figma, or multiplayer games), load balancers are not the best way to scale.

There's surprisingly little information online about the most effective pattern to scale these kinds of real-time apps – a technique which I like to call stateful routing. This post goes into detail about why a specialized solution is required, and is accompanied by this simple chat demo repo which showcases stateful routing in practice.

Load Balancers 101

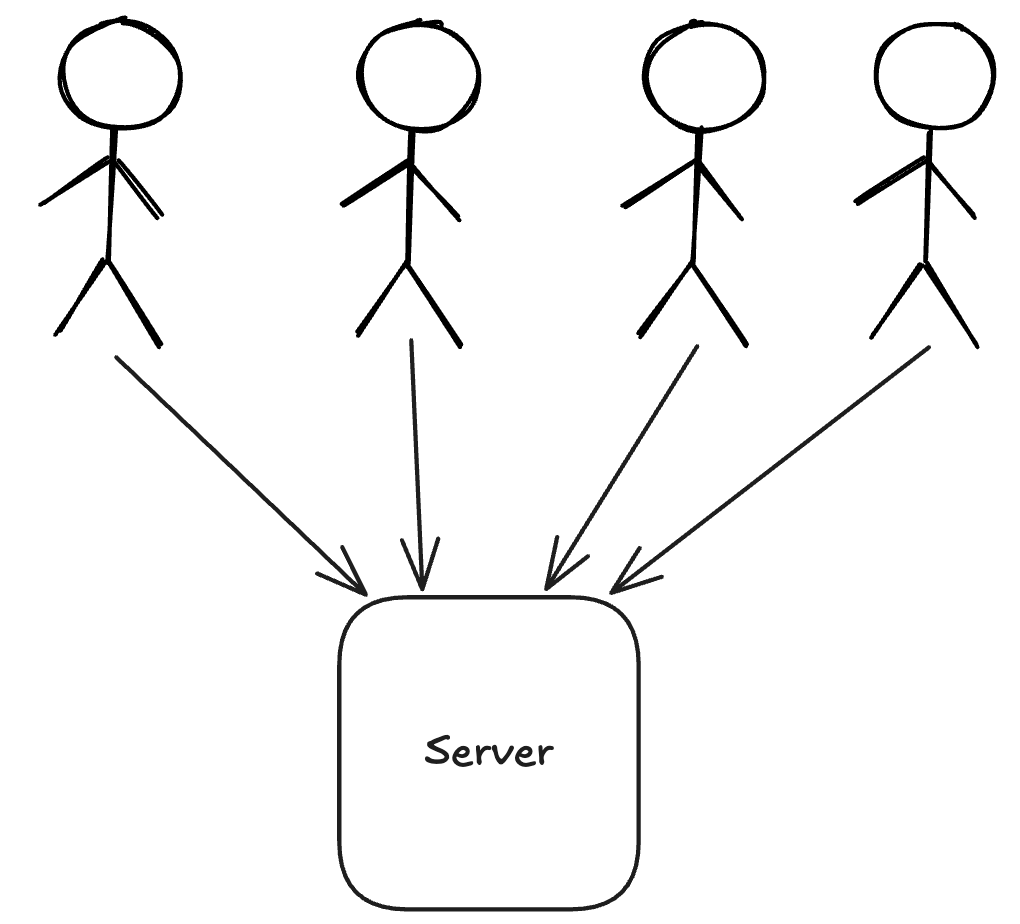

To understand what load balancers do, let's first consider a simple web service without one:

A single server instance can only handle so much load, so at a certain number of users it will begin to get overloaded.

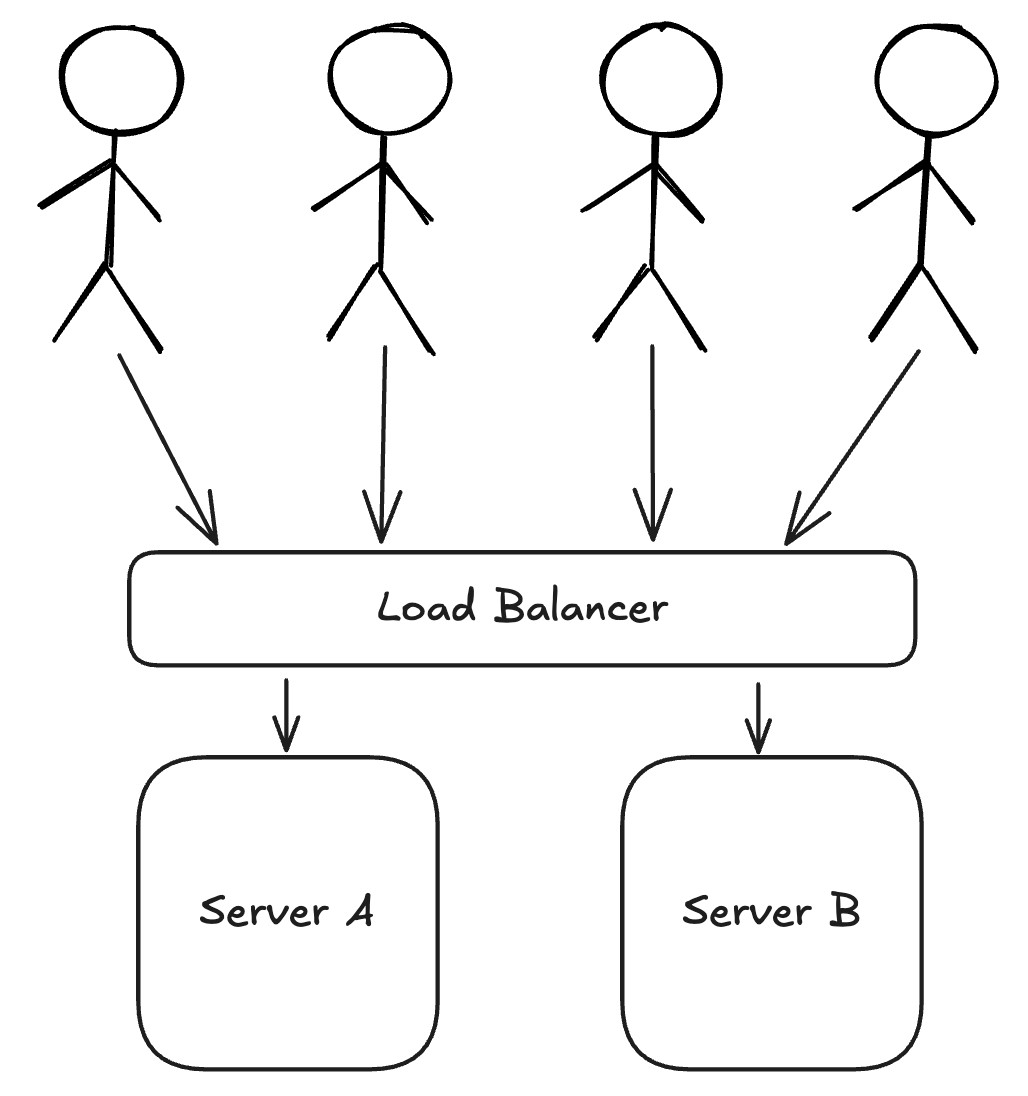

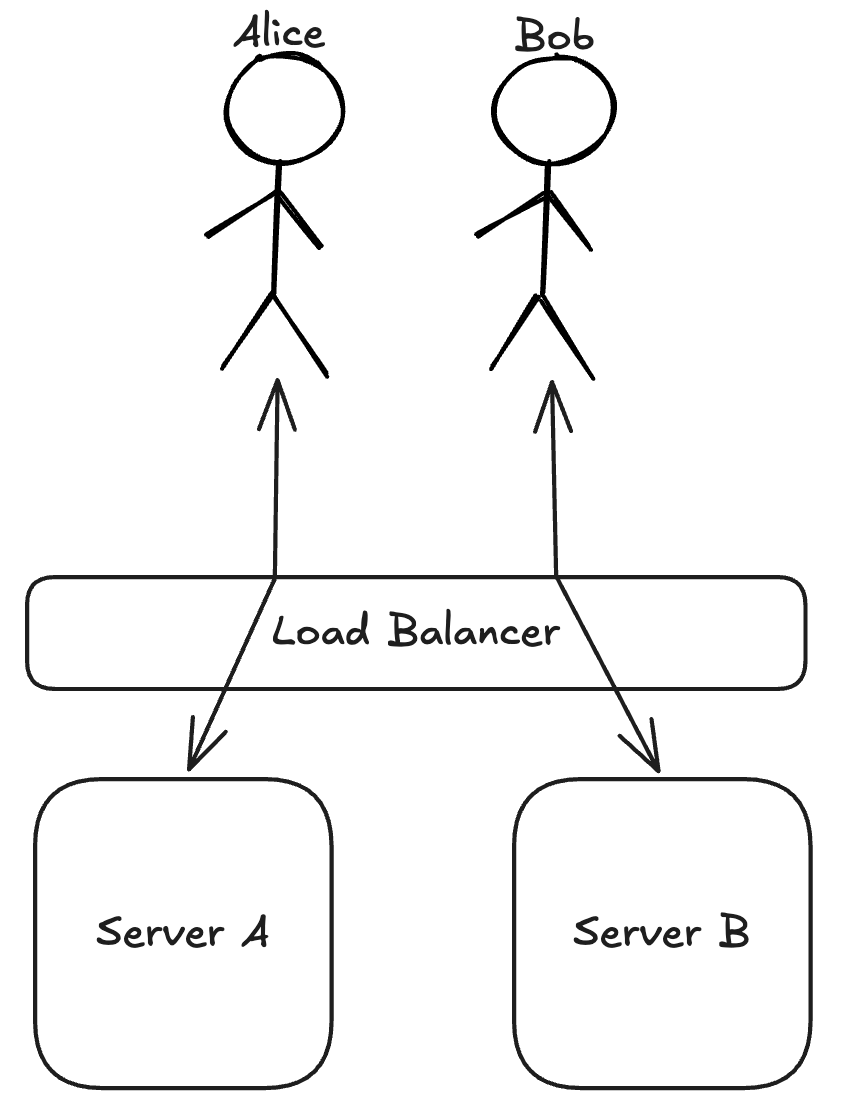

In order to be able to handle more traffic, you need more server instances. However, this introduces a problem: how do users know which server to connect to? This is where load balancers come in – they provide a single entry point for users, and distribute the requests evenly across multiple server instances:

This model works great as long as every server instance is stateless and interchangeable. A request can land on any instance, and you’ll get the same result. If an instance goes down, the load balancer simply sends traffic elsewhere.
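As a rough illustration of "distribute the requests evenly", here is a minimal round-robin sketch. The `RoundRobinBalancer` class and the host names are made up for this example; real load balancers support other strategies too (least connections, hashing, and so on).

```typescript
// Hypothetical round-robin balancer: hands out backends in a fixed rotation.
class RoundRobinBalancer {
  private readonly backends: string[];
  private next = 0;

  constructor(backends: string[]) {
    if (backends.length === 0) {
      throw new Error("at least one backend is required");
    }
    this.backends = backends;
  }

  // Returns the backend that should handle the next request.
  pick(): string {
    const backend = this.backends[this.next];
    this.next = (this.next + 1) % this.backends.length;
    return backend;
  }
}

const balancer = new RoundRobinBalancer(["server-a:8000", "server-b:8000"]);
// Three consecutive requests go to server-a, server-b, then server-a again.
const picks = [balancer.pick(), balancer.pick(), balancer.pick()];
console.log(picks);
```

Because any backend can serve any request, the balancer needs no knowledge of who the user is or what they are doing.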

Why This Breaks Down for Real-Time Apps

The "stateless and interchangeable" assumption holds true for the majority of backend services, like API services backed by a database. However, real-time apps aren’t made of isolated requests: they’re made of ongoing relationships between users.

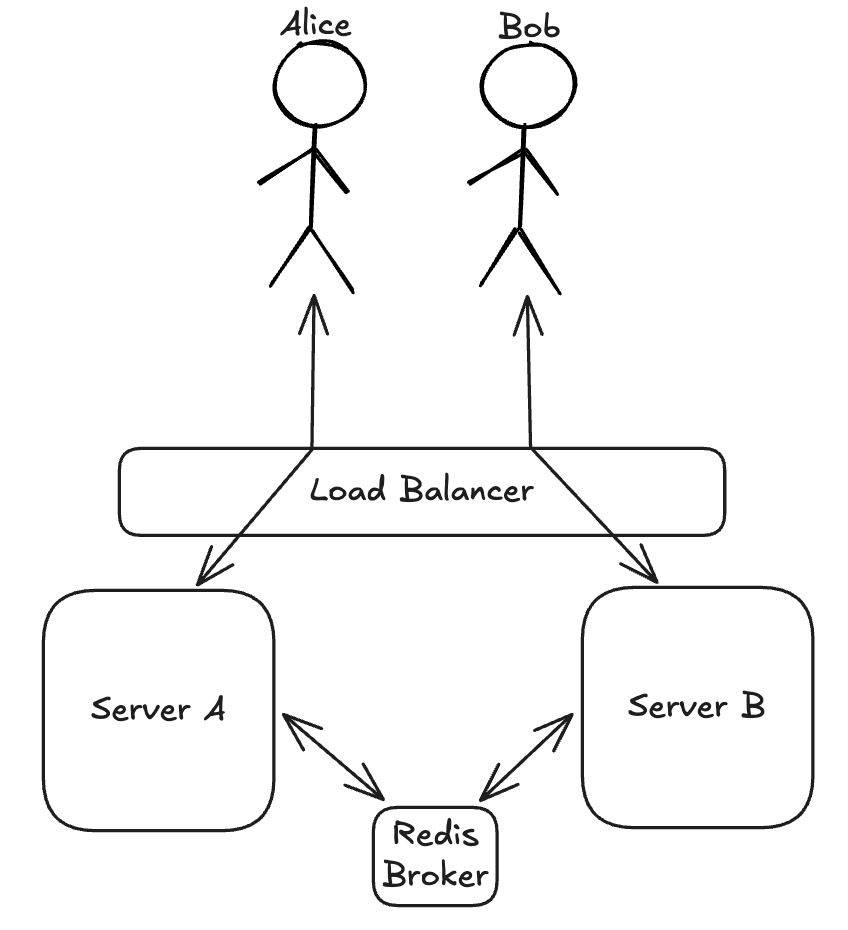

Think about a shared whiteboard or a group chat: when one user makes an update, the server needs to broadcast that change to everyone in the same "room". To receive real-time updates from the server, users typically establish long-lived connections (usually WebSocket). Put a load balancer in front of this and a problem emerges: broadcasts break down when users in the same room are split across different server instances.

With WebSocket connections, the server instances are no longer stateless and interchangeable – the long-lived connections belong to specific instances and thus introduce a type of local state. In order to make broadcasts work, either (1) the server instances need a way to communicate with one another, or (2) interacting users need to be connected to the same instance.
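To make the failure concrete, here is a small in-memory sketch. `ServerInstance` is a hypothetical stand-in that tracks user names instead of real sockets, but the shape of the problem is the same: each instance only knows about its own connections.

```typescript
// In-memory stand-in for a server instance; no real sockets are involved.
class ServerInstance {
  // roomId -> names of users whose connections live on THIS instance
  private readonly rooms = new Map<string, Set<string>>();

  connect(roomId: string, user: string): void {
    const members = this.rooms.get(roomId) ?? new Set<string>();
    members.add(user);
    this.rooms.set(roomId, members);
  }

  // Returns the users this instance can deliver to: only its own connections.
  broadcast(roomId: string): string[] {
    const members = this.rooms.get(roomId);
    return members ? Array.from(members) : [];
  }
}

const serverA = new ServerInstance();
const serverB = new ServerInstance();
serverA.connect("room-1", "alice"); // the load balancer sent Alice to A
serverB.connect("room-1", "bob"); // ...and Bob to B

// Alice's update is broadcast by server A, which reaches only Alice.
const reached = serverA.broadcast("room-1");
console.log(reached);
```

Bob is in the same room but on a different instance, so server A has no connection through which to reach him.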

The Message Broker Workaround

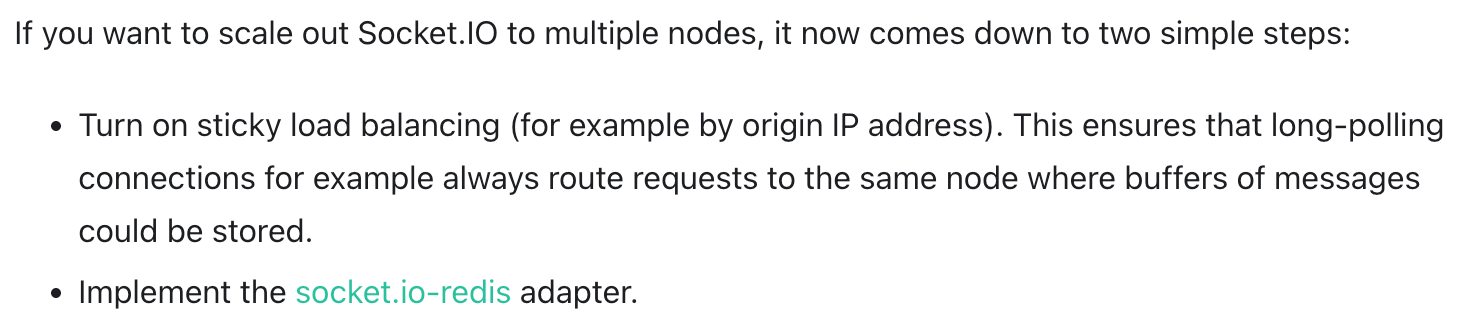

The vast majority of resources online only cover option (1) above, most commonly via a message broker (like Redis or NATS) to relay updates between servers. For example, it's what Socket.IO (one of the most popular WebSocket libraries, with 8M+ weekly npm downloads) recommends:

Each server instance subscribes to the broker to receive broadcasts from other instances, and then relays the broadcasts to any local connections for a given room. So now the message path becomes Alice -> Server A -> Redis -> Server B -> Bob:

This solution works, but it essentially pushes the scaling challenge down to the broker. The key insight is that every single message in the system has to go through the message broker. So while adding server instances reduces the share of traffic each instance handles, the broker still has to handle 100% of it. Message brokers can themselves be clustered and scaled, but this adds complexity and cost. Companies like Trello have written about challenges they've faced when scaling this way:

"The Socket.io server currently has some problems with scaling up to more than 10K simultaneous client connections when using multiple processes and the Redis store"
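The relay path described above, and the fact that the broker sees every message, can be sketched in memory. `Broker` and `BrokeredServer` are hypothetical classes for illustration only; they are not the Socket.IO adapter or a real Redis client.

```typescript
type Handler = (roomId: string, message: string) => void;

// In-memory stand-in for a broker such as Redis pub/sub.
class Broker {
  messagesHandled = 0;
  private readonly subscribers: Handler[] = [];

  subscribe(handler: Handler): void {
    this.subscribers.push(handler);
  }

  publish(roomId: string, message: string): void {
    this.messagesHandled += 1; // every message in the system is counted here
    this.subscribers.forEach((handler) => handler(roomId, message));
  }
}

class BrokeredServer {
  readonly delivered: string[] = [];
  private readonly localRooms = new Map<string, Set<string>>();
  private readonly broker: Broker;

  constructor(broker: Broker) {
    this.broker = broker;
    // Relay each broadcast from the broker to local connections in that room.
    broker.subscribe((roomId, message) => {
      const members = this.localRooms.get(roomId);
      if (members) {
        members.forEach((user) => this.delivered.push(`${user} <- ${message}`));
      }
    });
  }

  connect(roomId: string, user: string): void {
    const members = this.localRooms.get(roomId) ?? new Set<string>();
    members.add(user);
    this.localRooms.set(roomId, members);
  }

  // A client message is never delivered directly; it always goes via the broker.
  send(roomId: string, message: string): void {
    this.broker.publish(roomId, message);
  }
}

const broker = new Broker();
const brokeredA = new BrokeredServer(broker);
const brokeredB = new BrokeredServer(broker);
brokeredA.connect("room-1", "alice");
brokeredB.connect("room-1", "bob");
brokeredA.send("room-1", "hi"); // Alice -> Server A -> broker -> Server B -> Bob
brokeredB.send("room-1", "hello");
console.log(broker.messagesHandled); // 2: both messages crossed the broker
```

Adding a third or fourth `BrokeredServer` spreads the connections out, but `messagesHandled` grows by one for every message no matter how many servers there are.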

The main reason message brokers are often cited as the solution for scaling real-time backends is that they work well with load balancers, which are so prevalent that they tend to be an upfront assumption. But in my view, using brokers in this way is more of a workaround to solve the problem that load balancers introduce. By getting rid of the load balancer assumption in the first place, there is a much more elegant solution that emerges.

A Better Approach: Stateful Routing

While load balancers typically distribute traffic across the underlying server instances without regard to which users interact with one another, the alternative approach routes interacting users to the same server instance. Figma has written about how they use this for their core collaboration features:

"We use a client/server architecture where Figma clients are web pages that talk with a cluster of servers over WebSockets. Our servers currently spin up a separate process for each multiplayer document which everyone editing that document connects to"

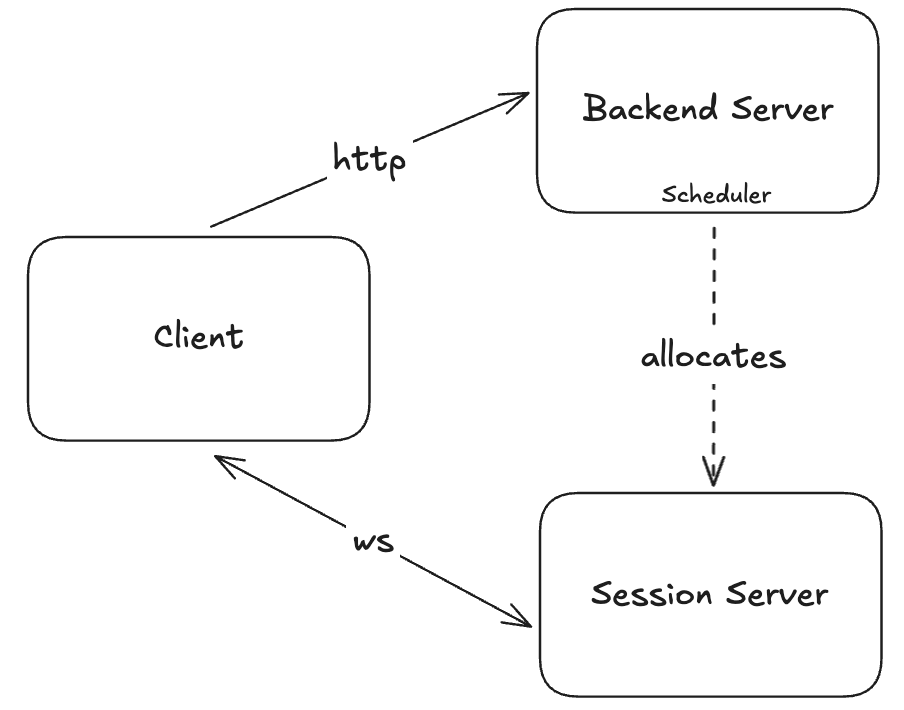

I haven't seen a standard name for this, but I like to call it stateful routing, in contrast to the typical "stateless routing" that load balancers offer. In this pattern, users don’t hit a generic load balancer. Instead, they’re routed directly to the specific real-time server that corresponds to their room or session:

That means:

- All users in a shared room connect to the same server.

- That server is the source of truth for that room’s state.

- No need for cross-server messaging, brokers, or duplicated state.

Now when Alice sends a message, the server can broadcast it to everyone connected to the same room. It’s fast, simple, and clean.
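Here is a minimal sketch of what that looks like inside a single server process. The `Room` class is hypothetical, and the `deliver` callbacks stand in for real WebSocket connections.

```typescript
// Hypothetical single-owner room: connections and state live in one process.
class Room {
  // Authoritative in-memory state for this room.
  readonly history: string[] = [];
  private readonly members = new Map<string, (message: string) => void>();

  join(user: string, deliver: (message: string) => void): void {
    this.members.set(user, deliver);
  }

  leave(user: string): void {
    this.members.delete(user);
  }

  // Broadcasting is a local loop: no broker and no cross-server hop.
  send(from: string, text: string): void {
    const message = `${from}: ${text}`;
    this.history.push(message);
    this.members.forEach((deliver) => deliver(message));
  }
}

const room = new Room();
const bobInbox: string[] = [];
room.join("alice", () => {});
room.join("bob", (message) => bobInbox.push(message));
room.send("alice", "hi");
console.log(bobInbox); // Bob received "alice: hi" directly from the room's server
```

Since every member of the room is connected to the same process, `send` reaches all of them with a single in-memory loop.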

How to Implement Stateful Routing

To showcase stateful routing in practice, I created a sample chat application. There are two main implementation challenges to solve:

- Dynamic room assignment

- Direct session ingress

Dynamic room assignment

The core tenet of stateful routing is that each room/session belongs to a particular server instance, and therefore you need a way to assign newly created rooms to an instance. This allocation process is typically referred to as "scheduling".

The repo has a simple scheduler interface:

```typescript
interface Scheduler {
  // assigns a new room to a session server instance, and returns its roomId
  createRoom(): Promise<string>;

  // returns the session server host corresponding to a given roomId
  getRoomHost(roomId: string): Promise<string | null>;
}
```

When it comes to room assignment (i.e. createRoom), there are two main components involved: knowing which targets (session server instances, in this case) are available, and a method for picking one of them.

The repo contains two scheduler implementations: the StaticScheduler for local development and the HathoraScheduler for production. The StaticScheduler takes in a list of static session server hosts (e.g. localhost:8000,localhost:8001), and randomly assigns each new room to one of them. The HathoraScheduler uses Hathora Cloud to dynamically create session server instances on demand.
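For a feel of how the static variant could work, here is a sketch written against the Scheduler interface. This is not the repo's actual code: the class name `InMemoryStaticScheduler`, the counter-based room ids, and the in-memory map are all assumptions made for illustration.

```typescript
interface Scheduler {
  createRoom(): Promise<string>;
  getRoomHost(roomId: string): Promise<string | null>;
}

// Sketch only: the repo's StaticScheduler may be implemented differently.
class InMemoryStaticScheduler implements Scheduler {
  private readonly hosts: string[];
  private readonly roomHosts = new Map<string, string>();
  private nextId = 1;

  constructor(hosts: string[]) {
    if (hosts.length === 0) {
      throw new Error("at least one session server host is required");
    }
    this.hosts = hosts;
  }

  async createRoom(): Promise<string> {
    // Illustrative id scheme; a real system would want unguessable, unique ids.
    const roomId = `room-${this.nextId++}`;
    // Pick a target at random from the known hosts and remember the assignment.
    const host = this.hosts[Math.floor(Math.random() * this.hosts.length)];
    this.roomHosts.set(roomId, host);
    return roomId;
  }

  async getRoomHost(roomId: string): Promise<string | null> {
    return this.roomHosts.get(roomId) ?? null;
  }
}

const scheduler = new InMemoryStaticScheduler(["localhost:8000", "localhost:8001"]);
scheduler
  .createRoom()
  .then((roomId) => scheduler.getRoomHost(roomId))
  .then((host) => console.log(host)); // one of the two configured hosts
```

The important property is that the assignment is sticky: once a room is created, every later `getRoomHost` call for it returns the same host, so all of its users end up on the same instance.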

Direct session ingress

The other key tenet of stateful routing is that each server instance must be publicly routable, so that clients can connect directly to individual instances. This can be surprisingly tricky to set up, since most hosting services have a built-in assumption of a load balancer and don't offer direct public routing to individual instances.

With the HathoraScheduler, each process gets a unique host + port to connect to, e.g. abcdef.edge.hathora.dev:40123, which routes to the underlying container running on e.g. 127.0.0.1:8000.

Combining dynamic room assignment and direct session ingress, this is what the typical architecture looks like:
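From the client's side, the flow boils down to "look up the room's host, then connect straight to it". Here is a rough sketch of that lookup step. The `RoomHostLookup` type mirrors `getRoomHost` from the Scheduler interface, while the `wss://` scheme, the `/ws` path, and the `roomId` query parameter are assumptions for this example rather than what the demo necessarily uses.

```typescript
// Minimal lookup contract, mirroring getRoomHost from the Scheduler interface.
type RoomHostLookup = (roomId: string) => Promise<string | null>;

// Hypothetical URL scheme: the path and query parameter are assumptions.
function buildSessionUrl(host: string, roomId: string): string {
  return `wss://${host}/ws?roomId=${encodeURIComponent(roomId)}`;
}

// Resolves the direct URL of the session server that owns the room.
async function resolveSessionUrl(lookup: RoomHostLookup, roomId: string): Promise<string> {
  const host = await lookup(roomId);
  if (host === null) {
    throw new Error(`unknown room: ${roomId}`);
  }
  return buildSessionUrl(host, roomId);
}

// Usage with a stubbed lookup; a browser client would then open a WebSocket to the URL.
const stubLookup: RoomHostLookup = async (roomId) =>
  roomId === "room-1" ? "abcdef.edge.hathora.dev:40123" : null;

resolveSessionUrl(stubLookup, "room-1").then((url) => console.log(url));
// wss://abcdef.edge.hathora.dev:40123/ws?roomId=room-1
```

Note that no load balancer appears anywhere in this path: the scheduler is only consulted for the lookup, and the real-time traffic goes directly to the instance that owns the room.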

To see it all in action, you can try the sample chat application live demo.

Final Thoughts

This post felt important for me to write because of the lack of information online about scalable real-time architectures for web applications. What little information does exist usually only mentions message brokers as a scaling solution, so I wanted to present stateful routing as an alternative, one which companies like Figma are known to use.

What's interesting is that this type of architecture is pervasive and well known in the gaming industry. Most competitive multiplayer games do some form of stateful routing, where a matchmaker backend allocates a session for a new match, and players connect directly to the session server to play. Hopefully this post increases awareness of this pattern, and we can see more applications outside of gaming start to adopt it.