August 2025

Over the last few months, we’ve built on the strong foundation we created for game servers and expanded to serve more use cases. Our recent launch of Horde UBA on Hathora was the first step, and since then we’ve delivered new ways to put our hybrid multi-cloud platform in the hands of more users.

One of our next steps is to support both CPU and GPU workloads with the same level of reliability and scale. This month, we released features that enable GPU orchestration, strengthening our platform for AI use cases while also directly benefiting our gaming customers.

Studios are already exploring how AI will shape their workflows, and we’re here to power that shift. Hathora has always lived at the intersection of creativity and technology, and expanding into new verticals only makes us stronger in what we offer you. Let’s dive in 🤿

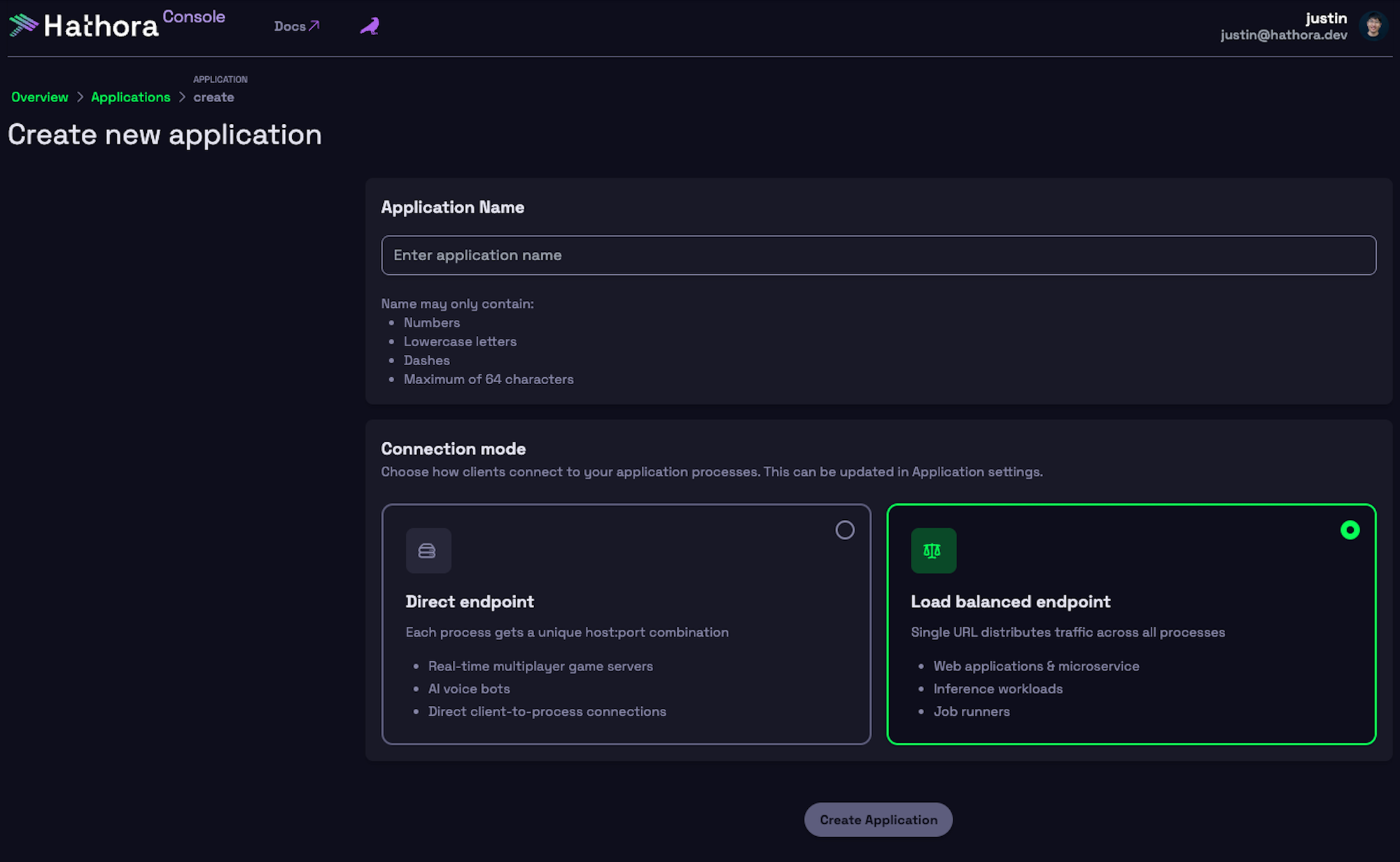

🏋️‍♀️ Load-balanced apps

You can now run load-balanced applications on Hathora. When creating or updating an app, you can set its type to Load-balanced. Any processes that spin up in that application can be reached through a single load-balanced URL, with traffic automatically routed at the edge to the nearest available process. For now, load-balanced apps accept only TCP traffic, which we automatically secure with TLS.
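
To make the TCP-plus-TLS point concrete, here is a minimal client sketch in Python. The hostname and port are hypothetical placeholders, not real Hathora endpoints, and the helper names are ours. Swap in the load-balanced URL shown for your own app.

```python
import socket
import ssl

# Hypothetical placeholders: replace with your app's load-balanced host and port.
APP_HOST = "my-app.example.invalid"
APP_PORT = 443  # assumed port, for illustration only


def build_tls_context() -> ssl.SSLContext:
    """Return a client context that verifies the server certificate and hostname."""
    return ssl.create_default_context()


def send_over_tls(host: str, port: int, payload: bytes, timeout: float = 5.0) -> bytes:
    """Open a TCP connection, wrap it in TLS, send one payload, return the first reply."""
    context = build_tls_context()
    with socket.create_connection((host, port), timeout=timeout) as raw_sock:
        # server_hostname enables SNI and hostname verification.
        with context.wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            tls_sock.sendall(payload)
            return tls_sock.recv(4096)
```

Calling `send_over_tls(APP_HOST, APP_PORT, b"ping")` would send one message over the encrypted connection. What comes back depends entirely on the protocol your process speaks.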

🛺 Process autoscaling

Load-balanced apps also support autoscaling. You can allocate processes by region, choose whether each region should autoscale, and set minimum and maximum process counts. If autoscaling is disabled for a region, Hathora keeps that region's process count fixed at your target.

Today, autoscaling is driven by the concurrent-requests metric. The load balancer tracks the number of concurrent requests each process is handling and scales the process count up or down accordingly.
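
As a rough mental model, the Python sketch below shows how a concurrent-requests autoscaler could pick a process count for one region. It is an illustration under our own assumptions, not Hathora's actual algorithm: the `RegionConfig` fields, the `target_per_process` parameter, and the ceiling-then-clamp rule are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionConfig:
    """Hypothetical per-region settings mirroring the options described above."""
    autoscale: bool
    min_processes: int
    max_processes: int
    target_processes: int  # used only when autoscale is False


def desired_processes(cfg: RegionConfig, concurrent_requests: int,
                      target_per_process: int) -> int:
    """Return the process count for a region (assumed rule, for illustration)."""
    if not cfg.autoscale:
        # Autoscaling disabled: hold the fixed target regardless of load.
        return cfg.target_processes
    if target_per_process <= 0:
        raise ValueError("target_per_process must be positive")
    # Enough processes so each handles at most target_per_process requests...
    needed = math.ceil(concurrent_requests / target_per_process)
    # ...clamped to the configured minimum and maximum.
    return max(cfg.min_processes, min(cfg.max_processes, needed))


scaling = RegionConfig(autoscale=True, min_processes=2, max_processes=10, target_processes=2)
fixed = RegionConfig(autoscale=False, min_processes=2, max_processes=10, target_processes=3)

print(desired_processes(scaling, 45, 10))   # 5
print(desired_processes(scaling, 0, 10))    # 2 (floor at the minimum)
print(desired_processes(scaling, 500, 10))  # 10 (capped at the maximum)
print(desired_processes(fixed, 500, 10))    # 3 (autoscaling disabled)
```

The clamp is what keeps a quiet region from dropping below its minimum and a traffic spike from exceeding its maximum.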

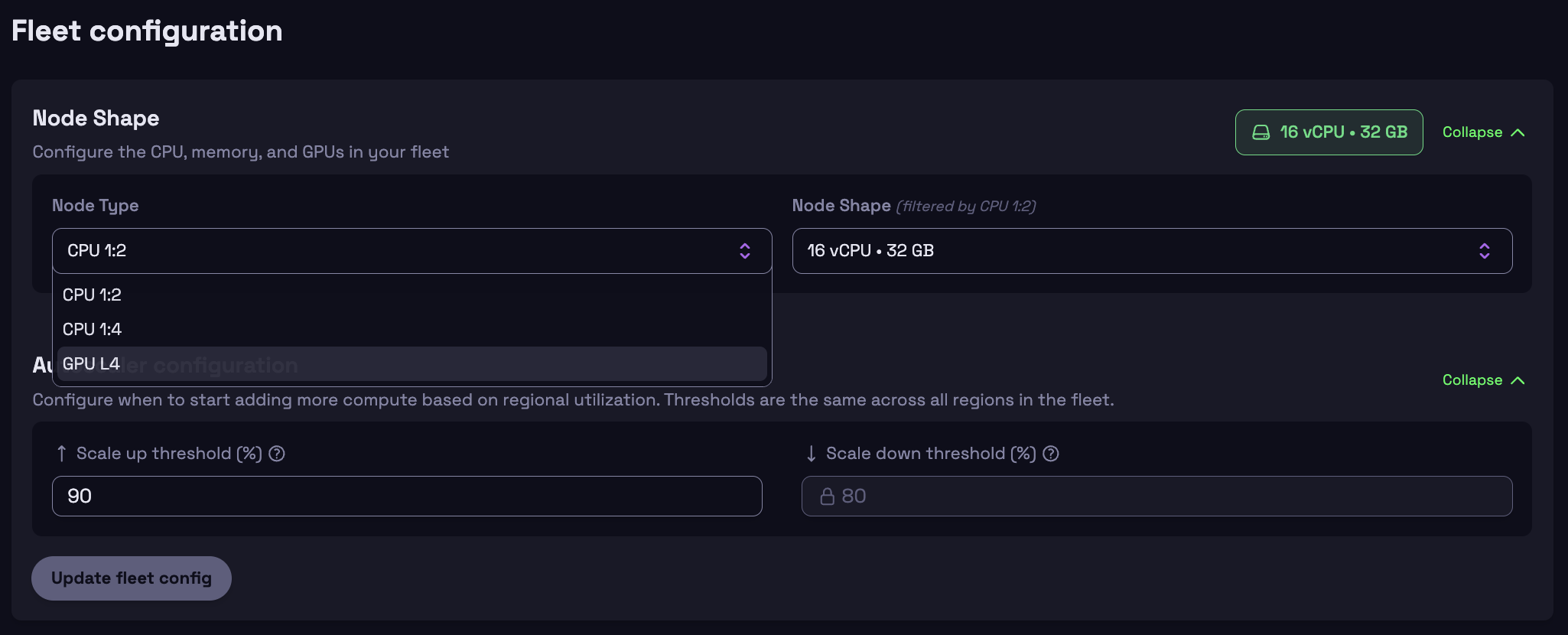

🎚️ Configure cloud node shape

You can now configure the node shape of your fleet directly from the console. Choose from CPU nodes with different CPU-to-memory ratios (1:2 and 1:4) or GPU nodes. We currently support L4 GPUs, with additional GPU options coming in the next few weeks.

We’re also working on multi-fleet support, so you’ll soon be able to run different node shapes within the same account.