So, how much slower are containers?

Containers have become the gold standard for packaging, distributing, and running applications. The world of gaming, however, has been slow to adopt them because performance (both CPU and network) is so important to providing a good player experience. Understandably so: game servers must complete intense computations within narrow time windows (about 16.7 ms per frame for a 60 FPS game) to prevent dropped frames. But now more and more studios are starting to embrace containers.

We wanted to know: are containers worth the performance cost?

At Hathora, we’re building a highly scalable orchestration offering for running dedicated game servers, and containers are a core part of our architecture. We’ve seen first-hand that containers can work well for modern competitive games like shooters, even those with high performance needs. But we wanted hard data to fully understand the overhead costs.

We scoured the internet for data on how containers affect compute and network performance, but couldn’t find much beyond an IBM research paper from 2014. So we decided to run our own tests to see how containers stack up against native processes on both virtual machines and bare metal. Even if you’re not using containers for game servers, this should still be quite informative!

tl;dr

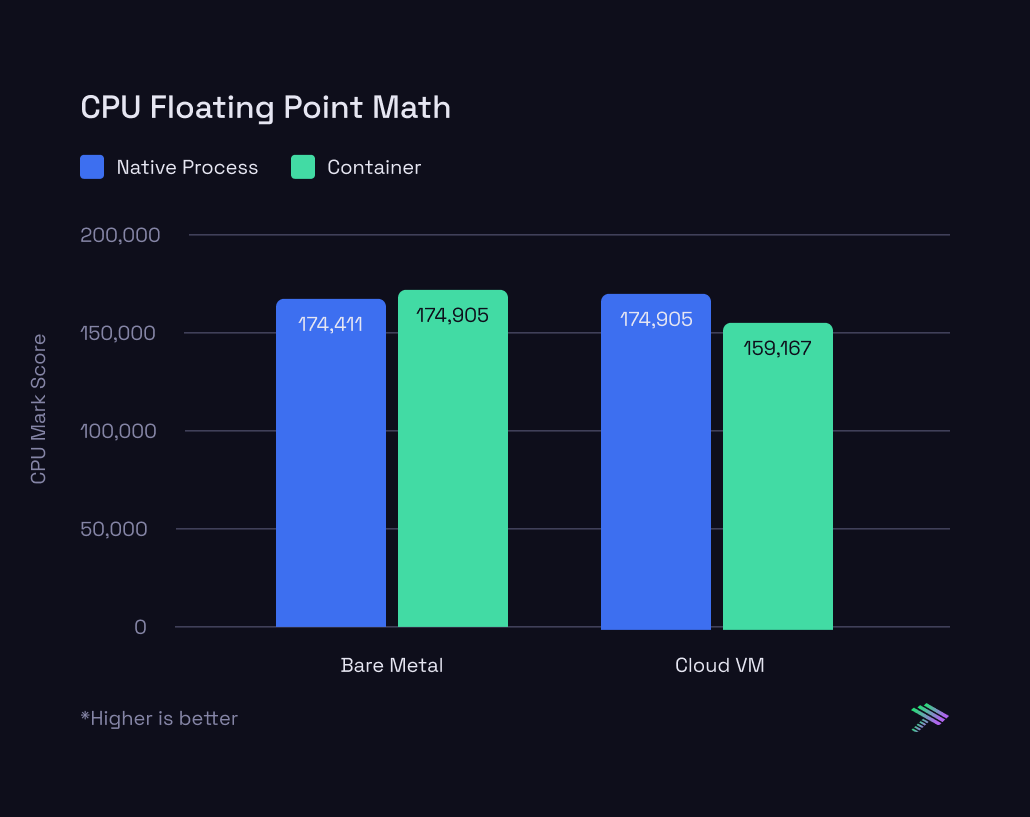

Cumulative compute performance scores (CPU Mark) for containers and native processes are remarkably consistent (within roughly ±0.1%). However, on the cloud VM, specific workloads like Physics, Floating Math, and Sorting showed higher variance, albeit without a clear winner.

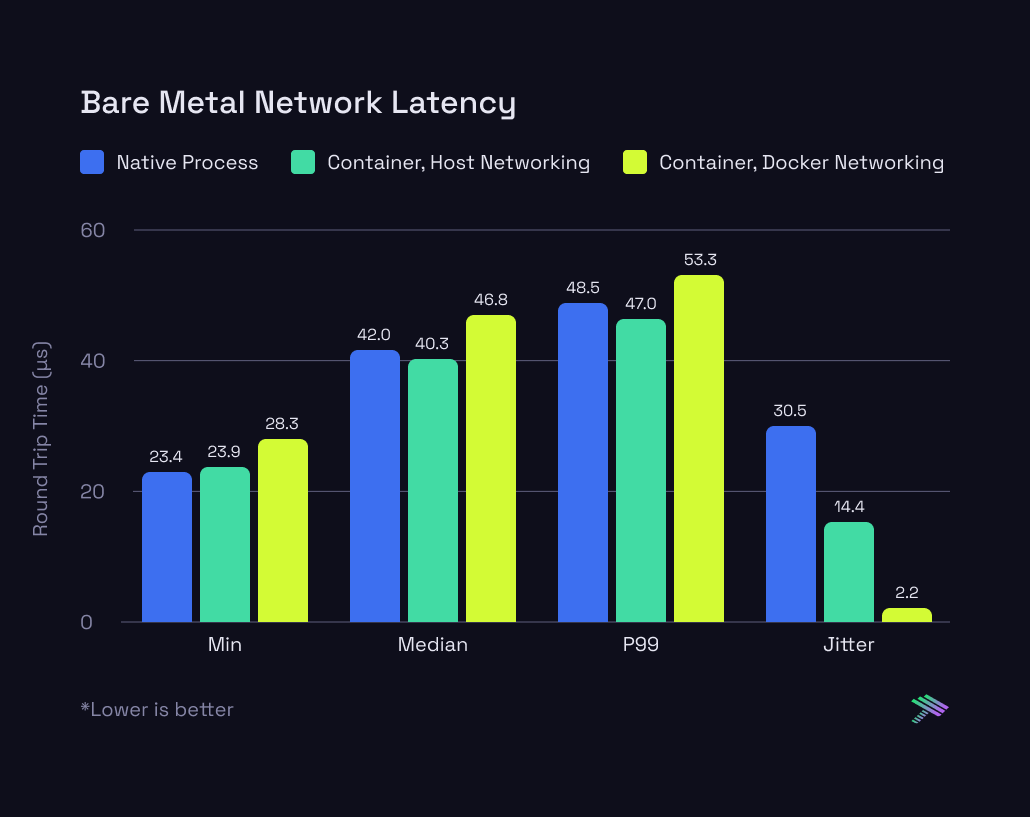

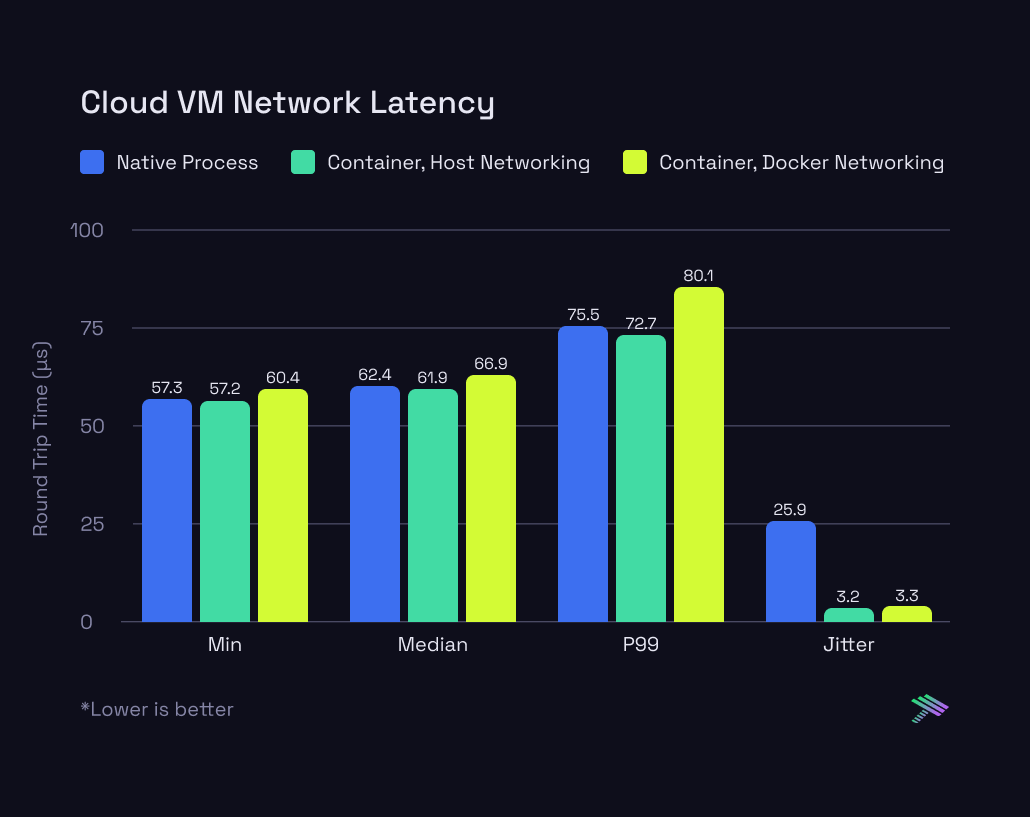

Container networking added an average latency of 5µs (microseconds) to the P99 measurement within a data center. When tested over larger distances (500+ miles via the Internet), variance between tests of the same configuration was higher than the delta between configurations.

In our observations, containers introduce no compute tax, and while there is a network performance tax, it's so minimal that variance introduced by the Internet has an order of magnitude more impact.

Read on if you want to understand how we conducted the testing and the in-depth data.

Testing Configurations

Our testing environment included both bare metal and cloud VM configurations, featuring:

- Bare Metal
  - AMD 4th Gen EPYC 32-core processor, 3.8 GHz with SMT disabled
  - 128GB RAM
  - 2TB NVMe disk
  - 50 Gbit/s NIC

- Cloud VM
  - AMD 4th Gen EPYC 32-core processor, 3.7 GHz with SMT disabled
  - 64GB RAM
  - 8GB high-performance networked SSD
  - 12.5 Gbit/s NIC

Both setups used Ubuntu 22.04, and we tried to keep the number of CPU cores the same. However, because of the limited options for VM configurations, we couldn't keep other specs like RAM, disk, and network card the same. This means we can't directly compare the bare metal setup to the cloud setup. But we can still learn a lot about how containers affect each system on its own.

The Investigation Begins

Our exploration focused on native versus containerized processes, covering both compute and network performance aspects.

Compute Performance

We utilized the PassMark PerformanceTest to evaluate a spectrum of computational tasks across four distinct setups:

- Bare Metal
  - Native processes
  - Containerized processes

- Cloud VM
  - Native processes
  - Containerized processes

Here’s what the CPU Mark score (which is an aggregate score across all sub-tests) looks like for each testing configuration:

The outcome was unexpected: the performance was almost identical! Containers ran just 0.12% slower than native processes on the bare metal server and were actually 0.08% quicker on the cloud VM. Differences this small aren't meaningful, so for all intents and purposes, they performed equally.
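As a rough illustration of how percentages like these are derived, the relative difference between two benchmark scores can be computed as in the sketch below. The function name and the example scores are hypothetical and chosen only to show the arithmetic; they are not the measured CPU Mark values.

```python
def percent_delta(native_score: float, container_score: float) -> float:
    """Return how much higher (+) or lower (-) the container score is,
    expressed as a percentage of the native score."""
    if native_score <= 0:
        raise ValueError("native_score must be positive")
    return (container_score - native_score) / native_score * 100.0


# Hypothetical scores, not measurements from these tests.
example = percent_delta(native_score=100_000, container_score=99_880)
print(f"{example:+.2f}%")  # a negative value means the container scored lower
```

A negative result means the containerized run scored lower than the native run, and a positive result means it scored higher.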

Now, let's zoom in on the specific results from the PassMark tests:

The bare metal results are extremely consistent: whether the workload is running inside a container or as a native process, there’s effectively no difference. The cloud VM results are more interesting. There’s a lot more variance between running in a container and running as a native process, but which one performs better flips depending on the specific test being run. There’s as much as a 10% swing in either direction, and it holds across multiple runs with the same setup. There are many possible reasons for this, and we plan to do a follow-up piece diving deeper into these differences.

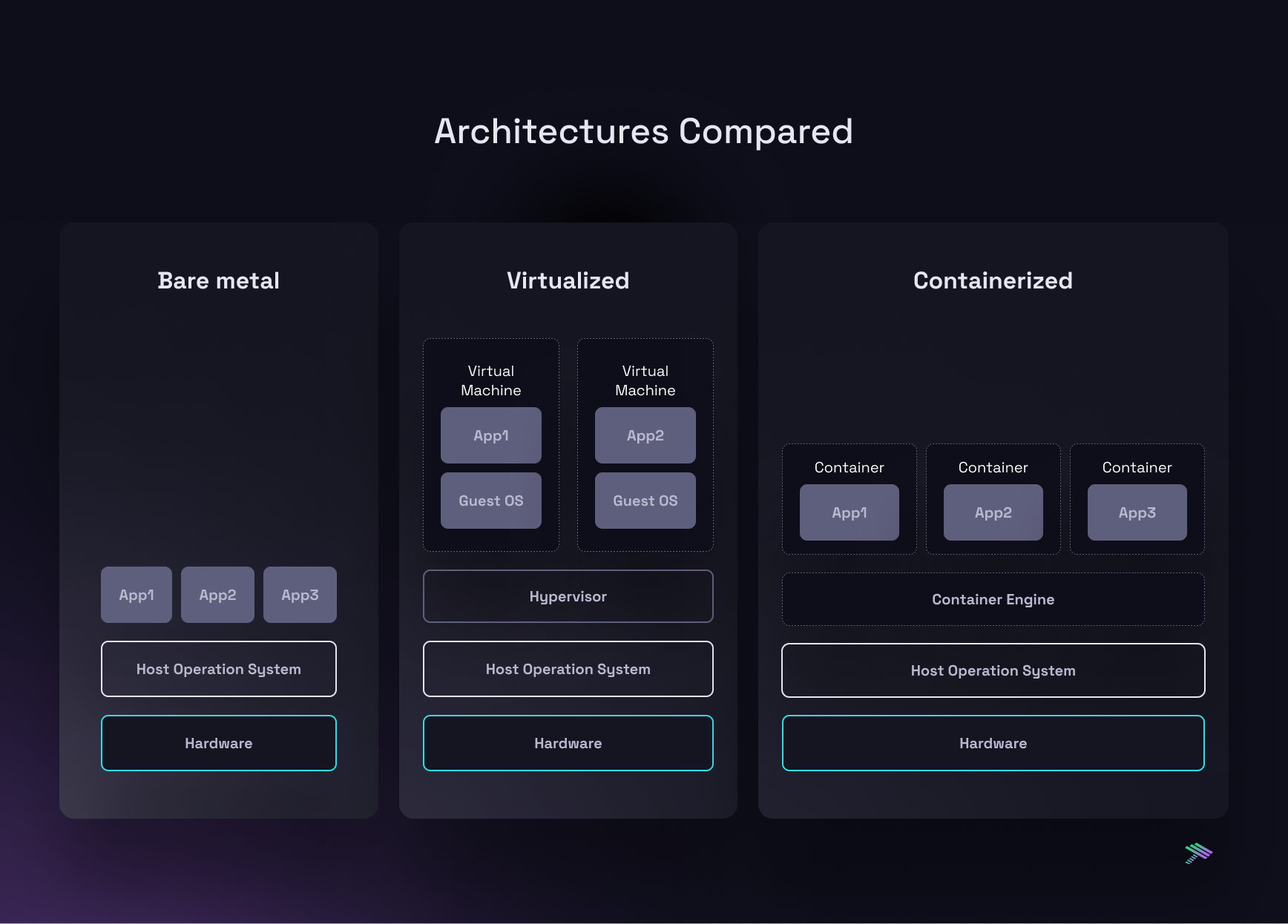

At the end of the day, containers are just Linux processes with an isolated file system and network (instead of just virtual memory). They run natively on the host kernel with no instruction translation tax, unlike VMs. So it makes sense that we didn’t observe any performance difference on the compute front.

But containers do introduce an additional layer of processing on the network side, so let’s see how network performance stacks up.

Network Performance

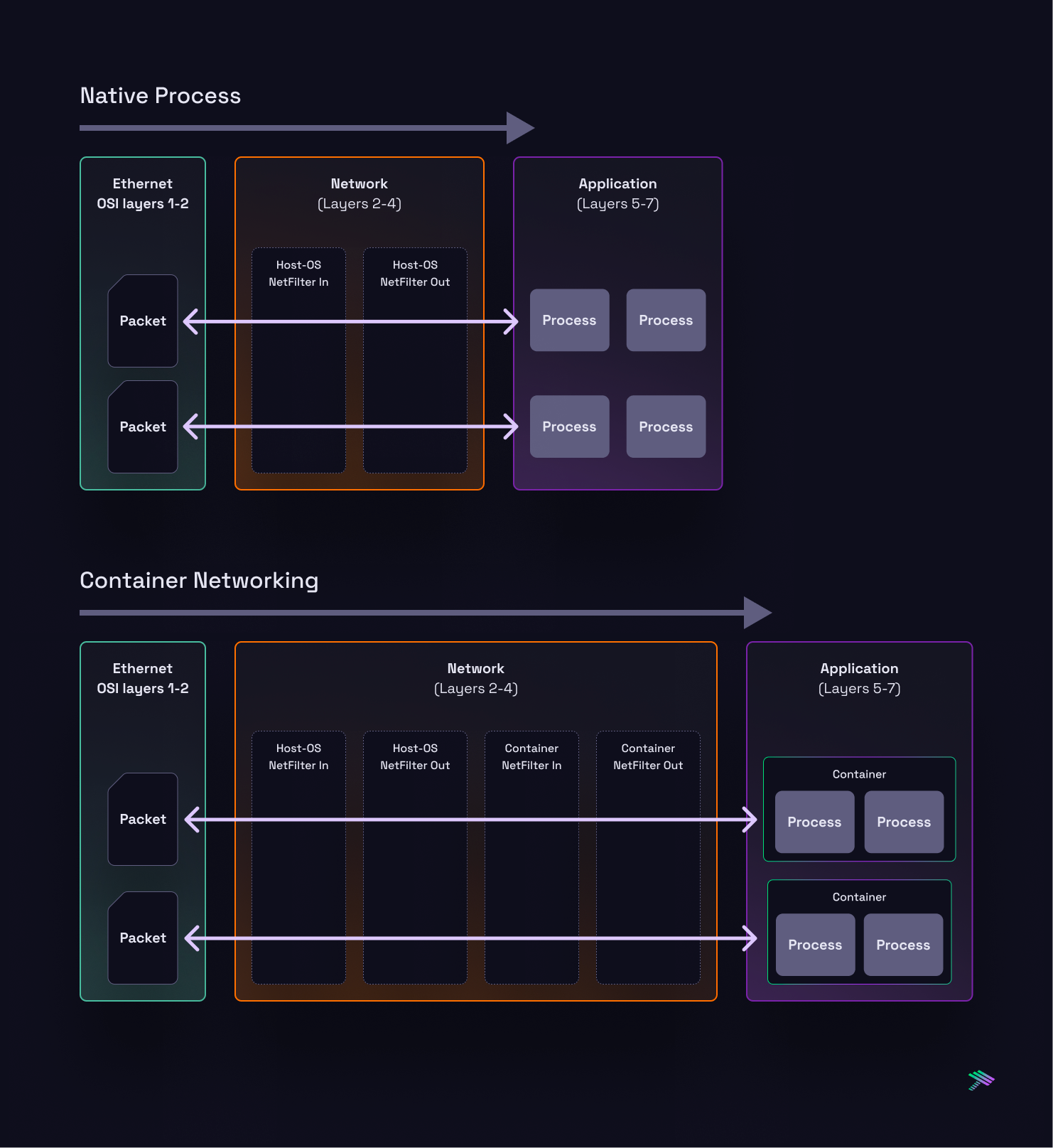

Let’s take a closer look at the exact path a packet takes from when it arrives at the network card until the application receives it.

Without containers, there’s minimal processing in Layers 2-4 before the packet is handed to the application. Once the container networking interface is introduced, there are a few more hops the packet needs to take before arriving at its destination. Given these additional hops, it’s pretty obvious that there will be a performance tax, so the questions we sought to answer are: how much, and does it matter?

For the gaming use case, latency and jitter are of utmost importance. So, we narrowed our network performance evaluation to these parameters. Utilizing a custom-built Go client and server, we measured round-trip times across 1 million UDP pings between two nodes within the same data center to minimize external interference.
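To make the measurement concrete, here is a minimal sketch of a UDP round-trip test with percentile reporting. It is written in Python rather than Go, runs both ends on the loopback interface, and is not the client and server used for the results in this post; all function names, the packet layout, and the nearest-rank percentile method are assumptions made for illustration.

```python
import math
import socket
import threading
import time


def run_echo_server(sock: socket.socket, stop: threading.Event) -> None:
    """Echo every UDP datagram back to its sender until `stop` is set."""
    sock.settimeout(0.1)  # wake up periodically to check the stop flag
    while not stop.is_set():
        try:
            data, addr = sock.recvfrom(64)
        except socket.timeout:
            continue
        sock.sendto(data, addr)


def measure_rtts(server_addr, count: int) -> list[float]:
    """Send `count` sequential UDP pings; return round-trip times in microseconds."""
    rtts = []
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as client:
        client.settimeout(1.0)
        for seq in range(count):
            payload = seq.to_bytes(8, "big")  # sequence number identifies the ping
            start = time.perf_counter_ns()
            client.sendto(payload, server_addr)
            try:
                reply, _ = client.recvfrom(64)
            except socket.timeout:
                continue  # treat as lost; UDP gives no delivery guarantee
            if reply == payload:
                rtts.append((time.perf_counter_ns() - start) / 1_000)
    return rtts


def percentile(samples, pct: float) -> float:
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def demo(count: int = 1000) -> None:
    """Run server and client in one process over loopback and print P50/P99."""
    server = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    stop = threading.Event()
    thread = threading.Thread(target=run_echo_server, args=(server, stop), daemon=True)
    thread.start()
    try:
        rtts = measure_rtts(server.getsockname(), count)
    finally:
        stop.set()
        thread.join()
        server.close()
    if not rtts:
        print("no replies received")
        return
    print(f"P50={percentile(rtts, 50):.1f}us P99={percentile(rtts, 99):.1f}us")
```

Calling `demo()` prints loopback numbers only. To compare configurations, the echo server would run on the second node, once per configuration, with the client pointed at that node's address.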

We ran the tests in 3 configurations across both bare metal and cloud VMs:

- Go server running as a native process

- Go server inside a container with “host” networking (`--network="host"` flag in Docker)

- Go server inside a container with Docker networking

Here are the results:

Our measured P99 delta is ~5µs (5 millionths of a second). Internet latency is typically measured in milliseconds (thousandths of a second), so this is orders of magnitude smaller than any relevant latency measurement used on a global scale. To put this in context, latency is largely a function of the distance between the client and server: when clients are far away, the expected connection latency is high, and when they are close, it is low. The distance between a host and its containerized workload is zero, so this overhead comes only from the minuscule extra routing of traffic to a private containerized workspace within the kernel. There is no practical difference in client latency between native processes and containerized processes.
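To put the 5µs figure in proportion, the short sketch below expresses it as a share of a 60 FPS frame budget and of an Internet round trip. The 20 ms round-trip time is a hypothetical value picked for illustration, not a measurement from these tests, and the names are ours.

```python
def overhead_share_percent(overhead_us: float, reference_us: float) -> float:
    """Express an overhead as a percentage of a reference duration (both in microseconds)."""
    if reference_us <= 0:
        raise ValueError("reference_us must be positive")
    return overhead_us / reference_us * 100.0


CONTAINER_P99_DELTA_US = 5.0        # P99 delta reported above
FRAME_BUDGET_US = 1_000_000 / 60    # one frame at 60 FPS, about 16,667 microseconds
ASSUMED_INTERNET_RTT_US = 20_000.0  # hypothetical 20 ms round trip, not a measurement

frame_share = overhead_share_percent(CONTAINER_P99_DELTA_US, FRAME_BUDGET_US)
rtt_share = overhead_share_percent(CONTAINER_P99_DELTA_US, ASSUMED_INTERNET_RTT_US)
print(f"{frame_share:.3f}% of a 60 FPS frame budget")
print(f"{rtt_share:.3f}% of the assumed round trip")
```

Under these assumptions, the container overhead is a few hundredths of a percent of either reference duration.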

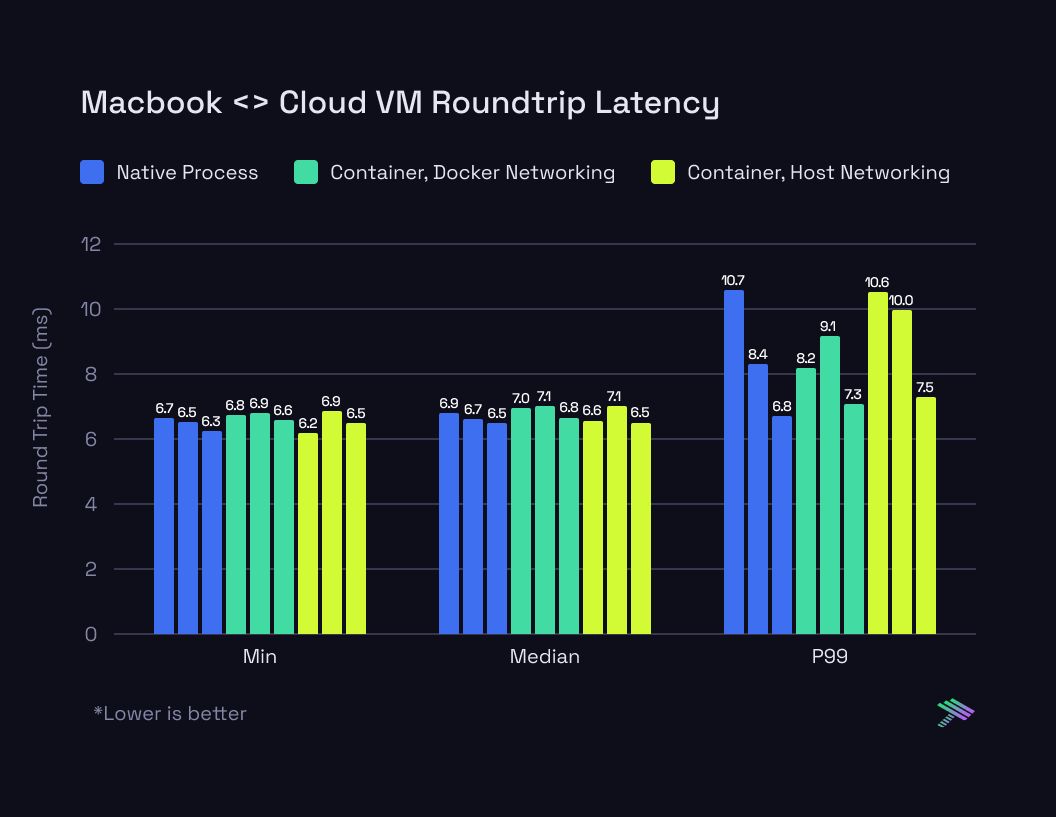

OK, so how does this translate to traffic traversing the Internet? We wanted to verify that the 5 microsecond delay in the container networking stack doesn’t result in a more meaningful delay when packets need to travel further. So we modified the test slightly to run the client on a MacBook in the office while running the server in the same 3 modes (native, container networking, container with host networking) on the cloud VM.

Here are the results:

The Internet adds an order of magnitude more variance (measured in milliseconds instead of microseconds) to the latency than the container network interface does. We ran each mode 3 times, and the results varied drastically between runs. In some cases, the container workloads were faster than the fastest native processes, but the likely cause is congestion at some other hop along the way.
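One way to check whether a difference between modes is distinguishable from noise is to compare the spread across repeated runs of the same mode with the gap between the per-mode averages. The sketch below shows that comparison. The latency values are placeholders invented for illustration and are not the results of these tests; the function names are also ours.

```python
from statistics import mean


def run_to_run_spread(runs_by_mode: dict[str, list[float]]) -> float:
    """Largest (max - min) seen across repeated runs of any single mode."""
    return max(max(runs) - min(runs) for runs in runs_by_mode.values())


def between_mode_delta(runs_by_mode: dict[str, list[float]]) -> float:
    """Gap between the highest and lowest per-mode mean."""
    means = [mean(runs) for runs in runs_by_mode.values()]
    return max(means) - min(means)


def delta_is_within_noise(runs_by_mode: dict[str, list[float]]) -> bool:
    """True when modes differ by no more than repeated runs of one mode do."""
    return between_mode_delta(runs_by_mode) <= run_to_run_spread(runs_by_mode)


# Placeholder P99 latencies in milliseconds, three runs per mode.
# These are NOT measurements from this article.
PLACEHOLDER_P99_MS = {
    "native": [30.0, 34.0, 31.0],
    "container_docker_network": [32.0, 29.0, 33.0],
    "container_host_network": [31.0, 35.0, 30.0],
}

print(delta_is_within_noise(PLACEHOLDER_P99_MS))
```

When the function returns `True`, the run-to-run variation is at least as large as the difference between modes, so the data cannot single out one mode as faster.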

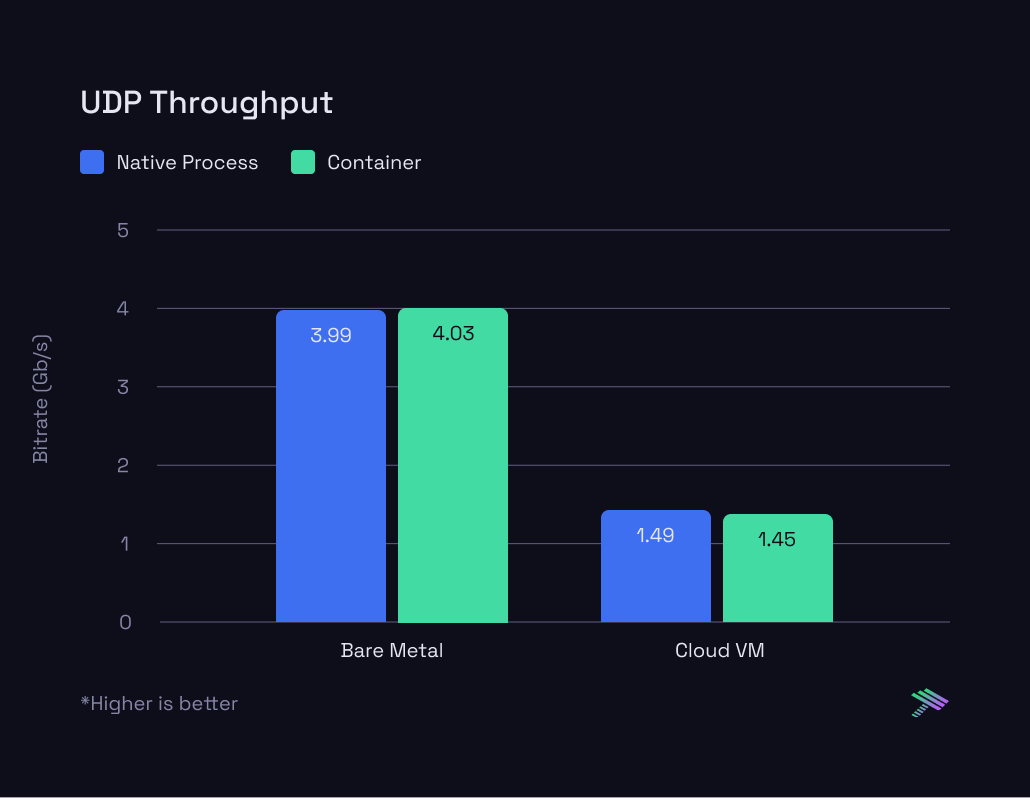

Lastly, we also ran a throughput test to see how much UDP traffic can be pushed before datagrams start getting dropped. We used iperf3 for the tests, running the server as a native process and inside a container. The client was run with these args: `iperf3 -c $SERVER_IP -u -t 60 -b 25G`, which attempts to push 25 Gbit/s to the server for 60 seconds.

Here are the results:

Similar to the other tests, we see that there’s very little impact on throughput as well.

At the end of the day, it is true that container networking introduces a network performance tax. However, as these results show, the impact is imperceptible and is dwarfed by the variance added by the Internet.

What's Next

At Hathora, we’ve been battle-testing containers in one of the most performance-intensive use cases: gaming. Our findings in this deep dive give us even more confidence that the performance cost of containers is remarkably low. Despite the critical need for speed in gaming, where even milliseconds matter, our results show that containers hold their ground against native processes. The network delay added by container networking, once a concern, turns out to be a minor hiccup rather than a deal-breaker, affirming containers' place in latency-sensitive applications.

However, our journey into container performance is far from over. While our initial results have been promising, showing minimal impact on both the compute and network fronts, questions linger, especially around the nuanced effects of Docker networking on system performance. These initial results serve as a stepping stone for what’s to come. As we continue to peel back the layers of container technology, we aim to furnish the gaming and broader tech communities with concrete data and analysis. The path forward is clear: more in-depth exploration and rigorous testing to reach definitive conclusions on container overhead, so that as we push the boundaries of what's possible in gaming technology, we do so with both eyes open.